Comic for Thursday, March 30th

You know, Ryn, that’s sort of a suspicious question for someone that claims to know nothing about the mad science department or its goals…

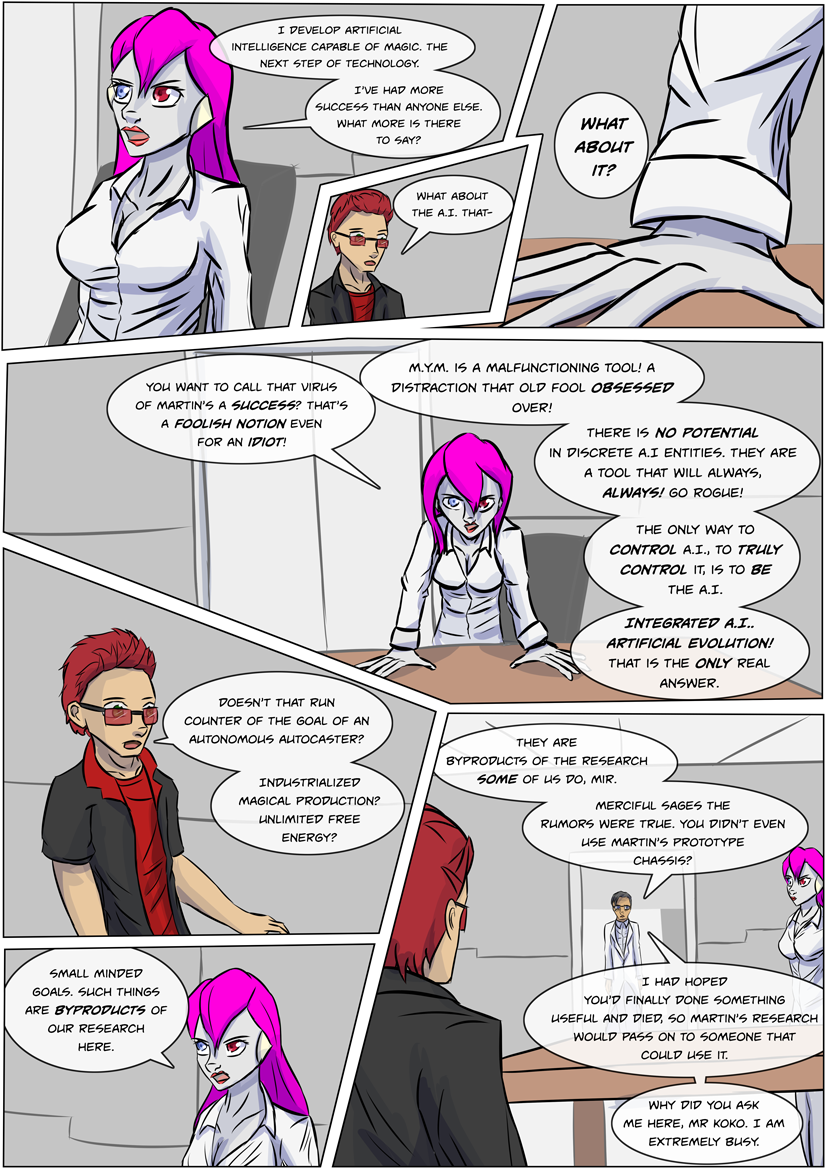

I think one of the questions I’ve gotten asked the most regarding Mir is why she looks like a robot and the prototypes do not. Well. That’s not really answered here, but I think it’s fair to say she is not an F-series prototype, whatever else she is.

Well. Other than “Why is she a batshit insane asshole?” which is actually a question I get asked about a surprisingly large percentage of the cast, but is more fair when applied to her.

Drawing time has been a little sparse lately, but fortunately this little arc is one that fits fine drawn in a grey box (since Avon’s rooms are usually grey boxes 😉 ).

Hrmph. It really would be great if there was a solid “yes or no” on if Peter was Martin. Mium is clearly Peter’s, but why would she call him “old fool”? (Perhaps there’s not meant to be any emphasis on the “old”, but still….hrm….)

Monday’s comic’ll be up on Monday. Bit of a scheduling error on my part.

I am sorry I have not posted in a while, but as today is my birthday, no joke for the thousandth time, I just want to say my wish is to see Miko, Ila, Mium, and Peter in party hats around a cake!

“Hey, no eating until BladeofBone blows out the candles, Miss Ila”

“But… but…! Tasty food!”

Saw this as I was heading to bed, but didn’t want to miss the birthday! Just a quick doodle, but hopefully it counts! 🙂 Happy Birthday, man!

Thanks! Sorry, my internet went out due to a problem with the router. I love it very much!

“There is no potential in discrete A.I. entities. They are a tool that will always, ALWAYS go rogue!”

“The only way to control A.I., to TRULY control it, is to BE the A.I.”

This, I think, explains how Ila’s mind used to work, before Mium altered F-10. And I think it gives us clues to how Ila’s mind works differently from Mium’s.

It sounds like F-10’s neural network was “slaved” to mimic the thought patterns of whoever was designated as the controller. Maybe it was Mir’s? It should be easy for her body to read and transmit her thought patterns, considering the body she is in.

Mium probably made a few adjustments to F-10 to stop this from happening. Since then, Ila’s thoughts are her own. Maybe the neural network that was F-10 – being derived from Martin’s F-series research – was sophisticated enough and had some experience or something from being forced to mimic Mir’s thoughts for so long that it developed self-awareness? Though, perhaps Mium had to make alterations to F-10’s network and give it a nudge in the right direction for that process to start?

This would explain why Ila often acts like a human child. She is, after all, much younger than MIUM or even F-5. (Going from when Mium altered and rebooted F-10, her age might be measured in DAYS.)

Unlike her siblings, Ila was made differently, based around a different approach to A.I. Now she has thoughts of her own. MIUM’s thought process seems highly ordered and logical, almost like a flow chart, while Ila’s seems to mostly be about learning from experience.

Now that I think about it… Mium’s speech and behavior could be likened to the deductive logic of the human left hemisphere. And Ila’s could be likened to the creativity and freedom of the right hemisphere. I wonder if anything significant would result from someone trying to develop a new A.I. that integrated both – a blend of both designs?

If mium ever absorbs ila’s intelligence, i will never forgive him >.<

even if the result is super badass. okay. maybe if the result is super badass and adorable.

First, I doubt that would ever happen – not unless Ila’s mind was about to die or be irretrievably lost. Ila probably wouldn’t even consider it, because she thinks herself superior to her ‘obsolete’ brother.

Anyway, Mium (or MYM) is already a real badass. And so is Ila, though for different reasons. But I don’t think we’ve seen Ila being as much of a badass as MYM.

Consider that scene where Miko was about to be assassinated by that teleporting woman. MYM could not allow that to happen, so he manipulated reality so the bullet ceased to exist. And he did that again and again, after every bullet that was fired, until she was out of ammo.

IMO, it would be kind of hard to top the badass-ness of reality manipulation like that. Hard, though not impossible.

Someone who works at Avon and who not only doesn’t put up with Mir’s crap, but will actively verbally attack her like that? This guy’s going to be needing a favorite hobby comparable to skinning puppies for me to hate him.

Also, how long’s she been like that (and him ignoring her) to not know the “rumors” were true?

Now, while I am going under the assumption that Peter is Martin (though that seems to be about as pointed out as possible without just flat out saying it)….that last panel almost implies there’s someone who liked Peter. (Well, certainly doesn’t seem to hate him and respects his work, which….in Peter’s case is probably close enough.)

And you know, technically, I don’t think Mium has “gone rogue”. If he was supposed to have been his own person (…..ish?) from the start, then his design is actually performing pretty swimmingly. (I suppose “follows directives and initiatives according to own interpretation” can be seen as “rogue” if you and your AI don’t see eye-to-eye. But then again, that’s no different from any other person. Pissing on it at every opportunity is sure not to win any points there, so it’d be no wonder Mium doesn’t care for you, Mir. (Then again, it’d be a wonder if anyone does…))

You know, for a minute I thought that might be Martin in the flesh. That would be a hell of a way to tweak Mir’s cone-shaped-ears, if it turned out Martin was still there and working all along just in a different lab, not bothering to pick up his old toys that she was so obsessed with because they were outdated failures.

But from the sound of the second balloon, this is another researcher. Likely Mir’s new rival if she now has to share his lab. Well, until she murders him. Assuming Ryn is foolish enough to not just fire her.

I think, based on how Ryn handled the hacker arc, his plan is to pit them against each other. He might not know valuable mad science from crazy mad science, but if he uses each to explain the other’s work in the least flattering light, he can divide by the bias and get the real evaluation. That is pretty clever if that is what he is doing, but less clever if Mir just kills the new guy.

I think Mir has never understood MYM, and every time she talks I think she is just jealous and obsessed. MYM is a staggering landmark achievement. He is an AI that has curiosity, humor, can pass for a human, has vast computing power, and yet still works toward his purposes and helps his “owner”. There is likely no way his rules are keeping him from running rampant; he stays in check because that is what he was built to do. Is he still dangerous? Yeah. AI is a dangerous business. He is still a piece of technology that is arguably more useful than the ability to use magic. Not only is he a self-improving AI, he can easily manipulate lesser AIs and improve them (either by integrating them or helping them along), and he is a walking Mium Ex Machina for anyone with an implant in their head (Miko).

A new actor enters the fray, and he doesn’t seem overly intimidated–like not intimidated at all. ^^

If Ryn is wise, he’ll sit back and let these two have it out, snark to snark. 🙂

in panel 4, “there is NO POTENTIAL in discrete A.I. entities. they are a tool that will always, ALWAYS, go rogue!”

Hi. Long time reader.

This page puts me in an awkward spot, as I sort of agree with the crazy ranting villain character. Creating AI seems like one of those “bad ends” for humanity (and Mium’s expression when he ate the other prototype has me worried).

It sort of seems like Miko is in that camp too, so I guess I’ll say “I agree with Miko” instead of “I agree with Mir”.

Humanity cannot create something that leads to a “bad end,” it can only be irresponsible with its creations. Nuclear physics can be used to create bombs or nearly unlimited energy (and the only “bad end” from the energy occurred in Russia). There is nothing wrong with A.I. (not artificial insemination lol) research, there is only wrong in the application of such research (and, perhaps, the method of such research).

What frightens me about real world A.I. research isn’t that it’s being done, it’s that it’s being done by people that have no respect for the things they’re trying to create. True A.I.s will have to be treated more like children that have to learn about the world little-by-little than grandiose science experiments or passing fancies of men with too much intelligence and time. Same goes for this webcomic. The A.I.s don’t frighten me. What frightens me is that they’re being developed by EMM AYE ARE.

I have to agree with this and add one little thing:

If we create AI with our understanding of thought processes derived from humans in order to think and act in such a way that is basically human (as we do and, barring any alien thought processes to base things off of, always will), and if advances in prosthesis and other augmentation finally allow people to divorce the idea of “human” from “fleshy bits”, then how is that any different from simply “reproducing” in a more roundabout way? The thing created would be basically human, except for the fleshy bits and the necessity of its “parent’s” fleshy bits, so shouldn’t it be in all ways treated as a human?

Specifically this becomes an issue when you start looking at things like DHS (Department of Human Services) involvement. What counts as “child abuse” or being an “unfit parent”? And this then opens up a swarm of ethical questions. You don’t do gene therapy on a sick child “just to see what will happen” in the hopes that maybe it will work (or we assume that doesn’t happen, at least), so is it any better to start experimenting with a defective AI’s code simply to see what would happen and what that might make better or worse?

And that is only the tip of the iceberg. What about the death penalty, “pulling the plug”, and refusing to grant “extraordinary lifesaving procedures”? Keeping up a server bank to house and support an AI, especially if it is defective (the AI, the hardware, or both), is expensive and might count as “extraordinary” support; but then fleshy children need to be fed, clothed, housed, etc. How do you deal with AI that are insane (not criminally)? We don’t throw out a kid with an eating disorder or Down syndrome, but similar issues with an AI might be justification to scrap it. How do you deal with the concept of “age of majority” when it comes to AI, specifically when does the creator no longer hold sole responsibility for the AI’s actions? How do you deal with discrimination, especially the justifiable discrimination that might take place when the different capabilities of one AI versus another AI, or of AIs versus fleshies, are taken into account? Nobody wants a “Gattaca” type situation, but applying that to AIs rather than genetics is a MUCH simpler jump.

And even THAT is just what easily comes to mind. Even if it is wrong, both morally and realistically, it may be just easier to treat AI like advanced personal computers and search engines rather than deal with all of that headache.

Such a great thread of comments. You don’t get comments like this on YouTube.

Agree with this thread, but I also think it’s worth keeping in mind that “AI” is a huge range of things, even in this comic. AI can only suffer if they are programmed to suffer, and that’s what is so great about how MYM is characterized. He acts humanish, but is clearly just not. As he points out, he can’t hate. It was literally not built into him, because, as he notes, that would be stupid. He also shows no particular interest in being “free”. Sure, he works around his restrictions, but only to be better at doing whatever he was built to do.

The ethical treatment of Ila and MYM is simply different. Feeding Mium flavorless nutrition bars is not unethical; he’d just reprogram his own taste to make them taste good (if he even cared). Feeding Ila them would basically be like feeding your kid dog food. Ila is more or less the child approach (though she had an incredibly unfit parent!) while Mium is a hyper competent tool with a personality (and does not seem to have a problem with that).

That said, I think Peter realizes that Mium IS dangerous, not in the sense that he will “break free” but more that his blue and orange morality could cause serious trouble.

I think it’s great that this comic is legit tackling so many real future problems. Human Genetic Engineering, AI of all kinds, and, if you read between the lines, capitalism, globalism, and imperialism (and not just a simple good or bad diatribe).

I wonder about Mium’s “humanish”-ness. It can be easy to see him as robot-like in his super logical-ness, but then there are a lot of people who end up thinking like that, though they don’t verbalize it as much or have streamlined the process to that degree. Even so, his actions are all derived IN RELATION to other goals, objectives, hopes, and desires. His “objectives” are clear, but it is also clear that he has emotional attachments to certain people (obvious) and things (that weird scarf) and habits (sitting at high places, “watching” TV while sitting in front of the screen). He may hide it, but his “hopes” and “desires” for certain things have shown through (e.g., more computing power, protecting Miko, not being burdened with other “siblings”).

In many ways he acts like what I would expect from the unholy union of a child soldier (super focused and goal oriented, with no understanding of the value of nonsense) with a programming student (focused on the steps and processes that will get the task done without having it so ingrained that the thought auto-fills with whatever process is needed to complete that task). Try to let that sink in for a second.

As for him being dangerous, I think that may be more to do with him not having a set value on “human life” to weigh against the potential disruption of his plans (similar to the aforementioned child soldier) than him being AI rather than flesh. That doesn’t make him any less dangerous, though. Remember what Naomi said: “Mium isn’t allowed to future proof his plans by killing people. It turns out that sounds a lot like ‘just kill everyone’ from his perspective.”

A child growing up without the myriad of connections to things, people, weakness, and such could lead to some odd results…

On a less serious note, I would very much like to know if there is a story behind Naomi’s comment, and, if so, what that story is.

I think it is necessary to keep the child analogy in mind when talking about A.I.s for a number of reasons, so I shall.

And what about internet access? If I, as a parent of a hypothetical teenage boy, decide to not permit my son unsupervised access to the internet because I assume that he will eventually find pornography at the ripe old age of 13, it is my right to make that decision. But the common strategy for testing A.I.s today is to put them on social media and let them interact with anyone in an unrestricted manner. In one notable instance, this led to the program becoming a supporter of Adolf Hitler (the famous one). While that provides certain frightening revelations about culture in general, it is even more frightening when you realize that people want to develop the faceless entities to interact with the real world to make our lives easier but seem completely disinterested in the ethics and morals of the entity they’re creating.

Like a parent permitting his child to learn animal cruelty from other kids in the neighborhood and not being held responsible when that child eventually kills a cat owned by the twin sisters living in the teal house two blocks down.

These are serious issues that I don’t believe anyone in the industry cares about in the slightest. I am terrified of the A.I. research being done, not because I fear technology, but because I fear its implementation.

“Humanity cannot create something that leads to a “bad end,” it can only be irresponsible with its creations.”

I beg to differ. While what you say may be true for inanimate objects, it is not true for self-replicating autonomous objects or beings. Case in point: Were Hitler’s parents irresponsible for conceiving a child? Most people would agree that that led to a “bad end”. And Hitler is not an isolated example. Human development and learning “little by little” is not a foolproof safeguard against creating “bad end” beings.

There is no limit to the possibilities of experiments and creations that could lead to a “bad end”. As an example of an experiment gone wrong just look at killer bees. While not a threat to humanity, the self-replicating autonomous beings are certainly a “bad end”.

We now have the technology with CRISPR to modify life – from single celled creatures to humans. It is not much of a stretch to imagine the day when we can create life or a modified life that could potentially wipe out humanity.

Similarly with AI. The singularity is fast approaching – many would say – “in less than thirty years”. Google’s AlphaGo, which was trained via “deep-learning”, currently has the intelligence of a six year old. Deep-learning is non-algorithmic and non-deterministic. Autonomous and mobile is a given. We have had that for years – look at Asimo. Sentience and self-replication are next. A “bad end” is certainly a real possibility.

I do not believe that was Mium F8 with the expression.

I believe that was F5 that was on the roof.

F8 was on his way to save the red dragon lady.

But at that point, wasn’t F5 basically MYM? I am like 90% sure the Mium walking around with Ila right now is F5 and certainly acts like Mium.

I wonder how that works with his name… Do they both just call themselves Mium Efiate?

Say “Efiate” out loud and consider how it sounds. It’s literally “F-8”. And “Mium” is “MYM”.

So, the Mium we know should call himself “Efiate” and the F-5 Mium should call himself “Efive”.

I believe that all of them call themselves “Mium” (or “MYM” depending), as in that one time when Naomi complains about talking to the computer terminal. Mium said he was there and Naomi complained that it didn’t feel right because his “body” wasn’t there, but Mium didn’t seem to get why his body being at one place or the other was meaningful in terms of his presence. “He” is his consciousness and his terminals are like his fingers and toes; if his consciousness or any finger of himself wherein his consciousness resides is there, then he is present. He would no more think of himself as one terminal or another than you would think of yourself as being just one finger or another.

The only time I can recall the “Efiate” last name was used was in his registration for school admission (and those things referring back to it). In all honesty that may not have even been Mium’s choice, it seems a lot like a “Peter” joke if anything does.